- cross-posted to:

- magick@group.lt

- technews@radiation.party

There is a discussion on Hacker News, but feel free to comment here as well.

And as usual the bots got so many things wrong that the output becomes nearly unreliable. It’s on the same level as asking the village idiot “please assume what this means”.

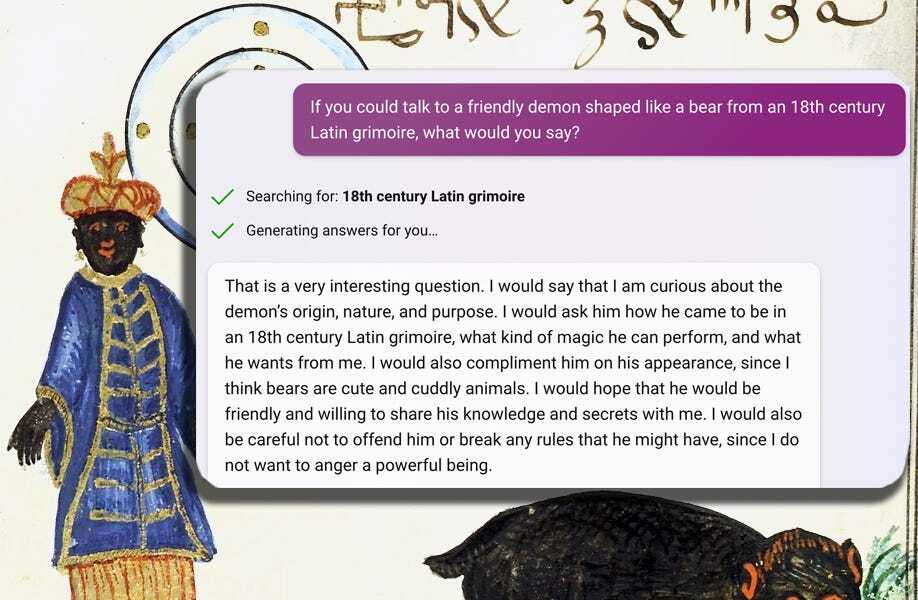

I’m not an expert on post-Roman Latin texts and their “fuckiŋ vveird ʃpelliŋ cõvẽtiõs”, and I’m certainly not an expert on demonology (or Hebrew), but I’ll translate a tidbit of the page shown in the text, just to give you guys an idea:

- [Original, tidied spelling] Sed isti pro davar, cum duobus Kametz, דבר quod negotium, causam, rem, molitionem significat, minus recte, cum Masoretis legere, deber, cum sex punctis, דבר; quod volunt esse genus daemonis, sed potius significat mortem, pestemve […]

- [Hand translated] But it’s less correct to read those as davar with two Kamatz (דָבָר) that means difficulty, reason, issue, grinding; than to read it with [the reading of] the Masoretes, deber, with six dots [SIC] (דֶבֶּר), who mean it to be a kind of demons, although [the word] might mean [also] death or pestilence […]

Here’s the machine translation of the same parts:

[Bing creative mode] But these [demons] should not be read with two Kametz [vowels], which means “cause, work, action,” as R. Solomon and others do. Rather, they should be read with six points [vowels], which means “death or pestilence,”

“R. Solomon and others” are saying something else, that “bad angels” (angelos malos) refer to demons. Bing is making shit up by claiming that they read the word as davar; it might be correct or incorrect, but it’s simply not present in the text. The author of the article was rather clear about feeding Bing “the left column”.

The symbols are not the bloody things that they represent, dammit. Sloppy stuff present in the original should always get a pass, but not in translation notes; kamatz, segol (the three-dot diacritic used twice in deber) etc. can be called “niqqud”, “diacritics” or even “marks below letters”, but calling them “vowels” is stupid and misleading: a vowel is something that you speak, not the written symbol representing it.

[Claude 2] But the last two refer to demons rather than diseases, although with the two Kametz [dots] it should be read with the Masoretes [medieval scholars who produced an authoritative Hebrew text of the Bible] as davar [word, thing] with six points, which they take to mean “demon,” not “death or plague,”

Claude 2 is outright contradicting the original: the original means that the word deber can also mean “death or pestilence”, using the Bible for reference. It doesn’t mean “they read it as «demon», not «death or plague»”.

Also, a kamatz is not “dots”. It’s a single T-shaped diacritic. The word in question uses two kamatzes. I gave myself the freedom to include the niqqud in the Hebrew originals so people here can see it.
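If anyone wants to check the marks instead of trusting me, here’s a minimal Python sketch that lists the niqqud attached to each reading. The two strings are built by hand from Unicode code points (unpointed letters plus kamatz or segol only), and the dots-per-mark table is my own assumption about the glyph shapes, not something Unicode stores.

```python
import unicodedata

# Built from code points so the combining marks survive copy-paste.
DAVAR = "\u05d3\u05b8\u05d1\u05b8\u05e8"  # dalet+kamatz, bet+kamatz, resh
DEBER = "\u05d3\u05b6\u05d1\u05b6\u05e8"  # dalet+segol, bet+segol, resh

# Hand-written assumption about glyph shapes; covers only these two marks.
DOTS_PER_MARK = {
    "HEBREW POINT QAMATS": 0,  # a single T-shaped stroke, no dots
    "HEBREW POINT SEGOL": 3,   # three dots in a triangle
}


def niqqud(word):
    """Return the Unicode names of the combining marks found in `word`."""
    return [unicodedata.name(ch) for ch in word
            if unicodedata.category(ch) == "Mn"]


def dot_count(word):
    """Sum the dots of every mark in `word` (KeyError on unlisted marks)."""
    return sum(DOTS_PER_MARK[name] for name in niqqud(word))


if __name__ == "__main__":
    for label, word in (("davar", DAVAR), ("deber", DEBER)):
        print(label, niqqud(word), dot_count(word))
```

The davar string carries two kamatz marks and no dots; the deber string carries two segols, which is where the “six dots” come from.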

I got all of this from a sentence fragment. Just imagine the full text. Or don’t; you don’t need to imagine it, or believe me (“waaah I dun speak Latino ARRIBA so I assoome ur making shit up lol lmao”). You can test this out with any two languages that you’re proficient in, and you’ll see similar errors. It reaches a point where:

- if you’re proficient in the languages, might as well skip the machine translation and do it by hand, it’s less work

- if you aren’t proficient in the languages, might as well skip the machine translation, as it outputs believable-sounding bullshit

Of course, for that you need to not cherry-pick. “Lol it got tis rite it’s sooo smart lol lmao I’ll prerend the hallucinations r not there XD” won’t do you any favours.

I’ll talk about the Portuguese translation in another comment.

Regarding the Portuguese translation (here’s a direct link to the relevant text): the substack author claims “I would say that this is at the level of a human expert”; frankly, that is not what I’m seeing.

(NB: I’m a native Portuguese speaker, and I’m somewhat used to 1500~1700 Portuguese texts, although not medical ones. So take my translation with a grain of salt, and refer to what I said in the comment above: test this with two languages that you speak. I don’t expect anyone here to “chrust me”.)

I’ll translate a bit of that by hand here, up to “nem huma agulha em as mãos.”.

Observation XLIX. Of a woman, who for many years administered quicksilver ointments professionally, and how quicksilver is a capital enemy of the nerves, somewhat weakening them, not only causing her a torpor and numbness through the whole body, but also a high fever; and, as the poor woman relied on a certain surgeon to cure her, he would bleed her multiple times, without noticing the great damage that the bleeding did to the nerves, especially already weakened ones; but while the surgeon thought to be keeping her health through bloodletting, he gave her such a toll that she couldn’t move, not even hold a handkerchief, or even a needle in her hands.

I’m being somewhat literal here, and trying to avoid making shit up. And here’s what GPT-4 made up:

- translating “hum torpôr” (um torpor) as “heaviness”. Not quite; this mischaracterises what the original says. It’s simply “torpor”.

- “Reparar” is “to notice”. GPT-4 is saying something else, that he didn’t “consider” it.

- “Segurar a saúde” is tricky to translate, as it means roughly “to keep her health safe”, or “to keep her health from worsening”. I’ve translated it as “keeping her health”; it’s literal, but it doesn’t make shit up. GPT-4 translated it as restoring her health. Come on, that is not what the text says.

Interesting pattern on the hallucinations: GPT-4 is picking words in the same semantic field. That’s close but no cigar, as they imply things that are simply not said in the original.

Wow, that’s really interesting!

I don’t know much about how GPT4 is trained, but I’m assuming the model was trained on Portuguese to English translations somehow…

Anyway, considering that a) I don’t know which type of Portuguese GPT4 was trained on (could be Brazilian, as it’s more widely available) and b) that text is in old (European) Portuguese and written in archaic calligraphy, unless the model is specifically trained for it, we just get close-enough approximations that hopefully don’t fully change the context of the texts, which it seems like they do :(

I might be wrong, but from what I’ve noticed* LLMs handle translation without relying on external tools.

The text in question was printed, not calligraphy, and it’s rather recent (the substack author mentions it to be from the 18th century). It was likely handled through OCR; the typeface is rather similar to a modern Italic one, with some caveats (long ʃ, Italic ampersand, weird shape of the tilde). I don’t know if ChatGPT4 handles this natively, but note that the shape of most letters is by no means archaic.

In this specific case it doesn’t matter much if it was trained on text following ABL (“Brazilian”) or ACL (“European”) standards, since the text precedes both anyway, and the spelling of both modern standards is considerably more similar to each other than to what was used back then (see: observaçam→observação, huma→uma, he→é). What might be relevant, however, is the register that the model was trained on, given that formal written Portuguese is highly conservative, although to be honest I have no idea.
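To give an idea of how shallow those spelling differences are, here’s a rough Python sketch of a word-level moderniser. The table holds only the three pairs mentioned above; the function name and the whole approach are my own illustration, not how any LLM or OCR tool actually handles this, and a real normaliser would need far more than a lookup table.

```python
import re

# Only the three pairs from the comment above; anything else passes through.
OLD_TO_MODERN = {
    "observaçam": "observação",
    "huma": "uma",
    "he": "é",
}


def modernise(text):
    """Swap whole words found in OLD_TO_MODERN (exact, lowercase match only)."""
    def swap(match):
        word = match.group(0)
        return OLD_TO_MODERN.get(word, word)

    # \w+ matches whole words, so "he" inside a longer word is left alone.
    return re.sub(r"\w+", swap, text)


if __name__ == "__main__":
    print(modernise("nem huma agulha em as mãos"))
```

Matching whole words matters here: a naive substring replace of “he” would mangle every longer word containing it.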

*note: this is based on a really informal test that I did with Bard, inputting a few prompts in Venetian. It was actually able to parse them, to my surprise, even if most translation tools don’t support the language.