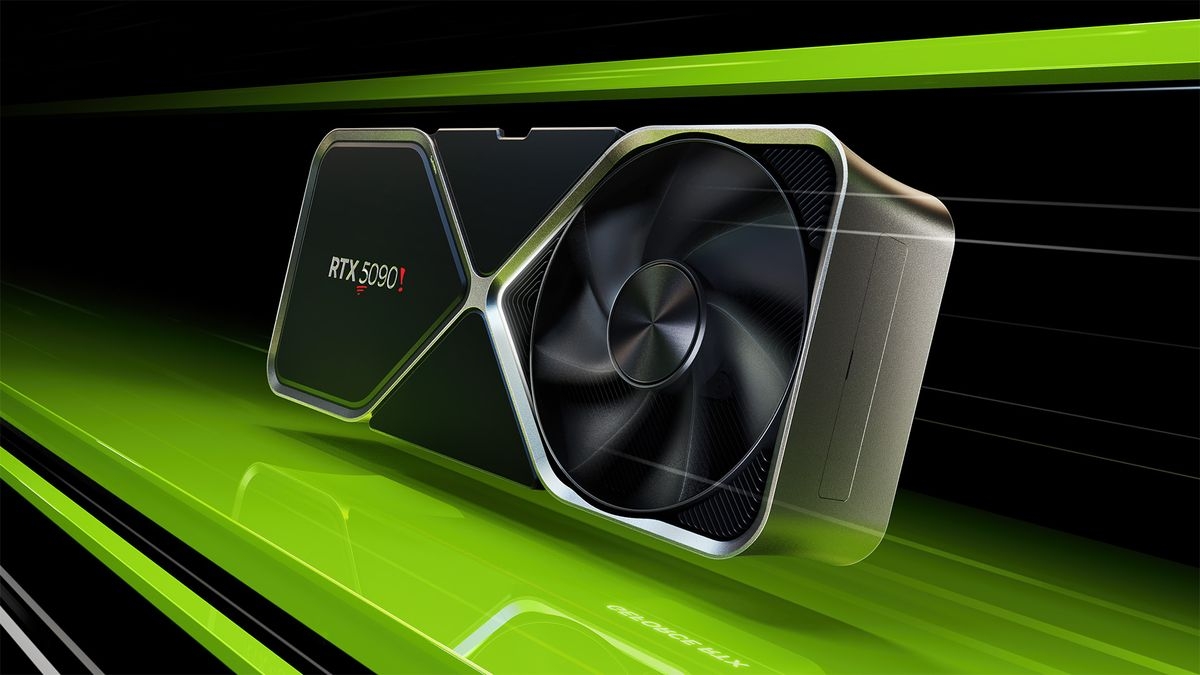

Cool cool cool. More cards I can’t afford.

But maybe lower prices on used 4090s?

Mmm vram, so hungry for vram.

Mmm vram! I love vram! Yum yum vram! I wanna eat vram!

Happy with my 4080 for now. Hoping for a miracle: superwide micro-LED 4K monitors coming out in the next few years lol.

I am on a 3080 (with a 1440p monitor). I'm considering a monitor upgrade (my current one is 75 Hz max), but I have no interest in a new GPU or in moving to 4K (for now).

The 3080 might be the perfect card for high-refresh-rate 1440p gaming, so I'd definitely recommend the upgrade. My main monitor is a 175 Hz 1440p OLED ultrawide, which my 4080 handles decently well, but I definitely wouldn't wanna go 4K with that card, since I'd have to turn settings down a lot more, even just for a stable 60 fps.
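For a rough sense of how big the jump to 4K is, here's a quick pixel count. It assumes the ultrawide is the common 3440x1440 panel and that "4K" means 3840x2160 (neither resolution is stated above), and GPU load doesn't scale perfectly with pixel count, so treat it as a ballpark.

```python
# Rough per-frame pixel counts. Assumes the common 3440x1440 ultrawide
# and 3840x2160 for "4K"; these resolutions are assumptions, not from the thread.
RESOLUTIONS = {
    "1440p (16:9)": (2560, 1440),
    "1440p ultrawide": (3440, 1440),
    "4K (16:9)": (3840, 2160),
}


def pixels(width: int, height: int) -> int:
    """Number of pixels rendered per frame at this resolution."""
    return width * height


# Compare everything against the 3440x1440 ultrawide.
base = pixels(*RESOLUTIONS["1440p ultrawide"])
for name, (w, h) in RESOLUTIONS.items():
    count = pixels(w, h)
    print(f"{name:16} {count:>10,} px  ({count / base:.2f}x the ultrawide)")
```

By this count, 4K is roughly 1.67x the pixels of a 3440x1440 ultrawide and 2.25x a standard 2560x1440 panel, which is why settings have to come down to hold the same frame rate.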

I have a few requirements for my next monitor, since I want it to last a long time:

- Micro-LED, since it'll have the benefits of OLED without much risk of burn-in.

- 4K, because if I'm gonna keep it for 10 or more years, I want it to look as good as possible, and 4K is very future-proof.

- Superwide, because I want to replace my three monitors with just one, as multi-monitor support isn't great.

There will probably be 4K micro-LED TVs long before there are monitors, but I don't want a TV, since the ergonomics are terrible for use on a desk.

32GB of VRAM would suck. Then I won't buy a 5090. A 5080 with only 16GB of GDDR7 would also suck. Better get a 3090 with 24GB then.

Are you being sarcastic? :)

Compared to the 24GB we had in 2020, that's barely an upgrade. I'm not joking: AI stuff needs more VRAM. 32GB is too little for the price they'll ask. Right now VRAM matters more than raw compute; you can't run larger models when VRAM is the bottleneck.
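To put rough numbers on the "larger models" point: a model's weights alone take about parameter count x bytes per parameter, before activations, KV cache, or framework overhead. The model sizes and precisions below are illustrative assumptions, not benchmarks of any particular model.

```python
# Back-of-the-envelope VRAM needed just to hold model weights.
# Ignores activations, KV cache, and framework overhead, so real usage is higher.
# Model sizes are illustrative assumptions; GB vs GiB is treated loosely here.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weights_gb(params_billion: float, precision: str) -> float:
    """Approximate weight memory in GB (billions of params x bytes per param)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weights_gb(params_billion, precision) <= vram_gb


for params in (7, 13, 34, 70):
    for prec in ("fp16", "int8", "int4"):
        need = weights_gb(params, prec)
        ok24 = "yes" if fits(params, prec, 24) else "no"
        ok32 = "yes" if fits(params, prec, 32) else "no"
        print(f"{params:>3}B {prec}: ~{need:5.1f} GB  fits 24GB: {ok24:3}  fits 32GB: {ok32}")
```

With these rough numbers, a 13B model at fp16 (~26 GB of weights) doesn't fit on a 24GB card but would on a 32GB one, while a 70B model doesn't fit in 32GB even at 4-bit (~35 GB).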

Edit: oh, I get it. I mentioned AI, my bad.

We had GPUs with 24GB in 2017. Go buy a pro one if you do AI and need that much RAM.

I mean, those cards are for gaming, right?

The RTX 3090 with 24GB of VRAM was the best you could get as a consumer GPU. Not sure which 2017 GPU you mean, but it was certainly in a completely different price range and not for gaming. That would be like comparing it to an A100; it makes no sense.

A lot of people can't afford two PCs or a GPU that costs 10 times as much, so obviously the GPU needs to be good for gaming and other stuff. I only voiced my opinion, after all. If you don't need more VRAM, that's fine. However, I don't have an unlimited wallet, and VRAM should be higher for the price they're asking, but again, you can disagree if you think it's fine.

Titan Z in its full glory was the Tesla K80, which shipped with 24GB of RAM in 2014.

Titan RTX had 24 ramsies in 2018.

If RAM is what you need and you don't wanna download it, you "can" buy an H100 with 80GB of RAM (2022, Hopper architecture); they sell it as a PCIe card too.

It costs some real cash-moneys tho.

In the realm of still-imaginable GPU prices, Quadro usually offered more RAM; e.g. the currently sold RTX 6000 Ada comes with 48 gigirammers.

On consumer gaming cards, nVidia has been horrible with RAM for a few gens now. I don't care about AI (I use it once a year on my desktop, and on my servers I don't even run it on GPUs because of how little I need it), but it's definitely not enough VRAM for gaming imho; you need some gigs as a buffer for other shit.

I know there have been non gaming GPUs with so much VRAM, but that’s beside the point.

Titan is considered consumer, Quadro workstation, Tesla enterprise/datacentre.

I didn't give it much thought, but I would consider AI that needs more than low- or mid-range consumer VRAM the domain of Quadro.

No problem, but if you're just tinkering around, you could get by with even less memory, as long as the model stays in VRAM and you feed it small pieces in small batches.

We all had P-series GPUs, and we had to upgrade because the trainee's model didn't fit in 16GB (they probably had too much money), so I don't remember which card it was that had 24GB.

For just tinkering around, one could use SD 1.5 on a 4GB VRAM GPU and stop after a few minutes. I spend quite a lot of time on AI image generation, about 4 hours a day on average for over a year now. New models, especially for AI video generation, will need more VRAM, but since I don't do this commercially, I can't just pay 30k for a GPU.

The only thing keeping 4080 (and 5080) cards "reasonably" priced is that they only have 16GB, so they aren't that good for AI shit. You don't need more than 16GB of VRAM for gaming. If those cards had more VRAM, AI datacenters would snap them up, pushing their price even higher than it is.

I have a 7900 XT and was using over 17 gigs in Jedi Survivor. No ray tracing, no frame gen. Just raw raster and max AA.

Granted, that’s because that game is so horribly optimized. But still… I used more than 16gig.

You kinda can… Nvidia card users have been having the toughest time with the Hunt: Showdown update because CryEngine is happily gobbling up VRAM. AMD cards don't have that problem, but various Nvidia card owners have had bad experiences running at the resolutions they normally use.

Maybe 16GB is the number where things are okay; I haven't heard complaints about cards above 12GB. Point being… Nvidia's VRAM stinginess has bitten some folks and at least one game developer.

Still, 32 seems EXCESSIVE.

nVidia & production yields decide/plan how much RAM they are gonna give them.

If it made financial sense (i.e. if a market existed), nVidia would stop making desktop cards overnight.

It's very low imo if you want 4K gaming to work.

For VR, you already do.