You could use ShareX screen record then upload to Streamable. Can you also provide the thread/chat export that is faulty so I can try to replicate the problem on my end?

I’d recommend waiting a couple of seconds before reloading or closing the page, to make sure the image is ‘converted/saved’ in the chat. I tried clicking ‘keep’ and then immediately reloading the page (and even closing the page as soon as I clicked ‘keep’), and it still kept the image. I’m not sure what other factors might affect whether it saves.

If you could provide a video demo of the problem with the console open, it would help to see whether there are problems with the code.

Glad to help!

Any text placed after the ‘odds’ declaration makes the item invalid, e.g.

Ss^0.5 or s

Tt^0.5 || t

If you want a randomized value where Tt is chosen with odds of 0.5, you could use:

{Ss^0.5|s}

{Tt^0.5|t}
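To make the effect of the odds concrete, here is a rough JavaScript sketch of weighted selection. It is not Perchance's actual engine; `parseInlineList`, `probabilities`, and `pick` are hypothetical helpers, and the sketch assumes that an item without explicit odds has a weight of 1.

```javascript
// Sketch only: mimics how relative odds behave, not Perchance's real engine.
// Assumption: items without an explicit ^odds value have a weight of 1.
function parseInlineList(text) {
  // "{Ss^0.5|s}" -> [{ value: "Ss", weight: 0.5 }, { value: "s", weight: 1 }]
  const inner = text.trim().replace(/^\{/, "").replace(/\}$/, "");
  return inner.split("|").map((part) => {
    const match = part.match(/^(.*?)\s*\^\s*([0-9.]+)\s*$/);
    return match
      ? { value: match[1], weight: Number(match[2]) }
      : { value: part, weight: 1 };
  });
}

// Turn the relative weights into probabilities that sum to 1.
function probabilities(items) {
  const total = items.reduce((sum, item) => sum + item.weight, 0);
  return items.map((item) => ({ value: item.value, p: item.weight / total }));
}

// Pick one item; `random` can be swapped out to make the result repeatable.
function pick(items, random = Math.random) {
  const total = items.reduce((sum, item) => sum + item.weight, 0);
  let roll = random() * total;
  for (const item of items) {
    roll -= item.weight;
    if (roll < 0) return item.value;
  }
  return items[items.length - 1].value; // guard against rounding
}

const items = parseInlineList("{Ss^0.5|s}");
console.log(probabilities(items));
console.log(pick(items));
```

Under that assumption the odds are relative: Ss (0.5) against s (1) works out to roughly a one-in-three chance for Ss, not 50%.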

If you want to use ‘odds’ together with HTML formatting, place the odds after the formatted item, e.g. change

<u>Ag^0.5</u>

to

<u>Ag</u> ^0.5

The odds should always come at the end of the item.

In your [] you are setting the value of m to the joined items as a string, not to the list. To fix it, store the list in the variable first, then use joinItems on the variable afterwards: []

Then, to output the names: [], which iterates over the markings in m, gets their names, and then joins them with joinItems.
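As a plain JavaScript analogy (not Perchance syntax), the difference between storing the joined string and storing the list looks roughly like this. The `markings` data and its `name` property are made up for illustration, and `join` stands in for joinItems.

```javascript
// Hypothetical data standing in for the selected "markings" items.
const markings = [{ name: "stripes" }, { name: "spots" }, { name: "socks" }];

// Problem: joining right away stores a string, so the individual items are lost.
const joined = markings.map((item) => item.name).join(", ");
console.log(typeof joined); // "string"

// Fix: store the list itself first...
const m = markings.slice(0, 2);

// ...then read each item's name and join only when producing the output.
const output = m.map((item) => item.name).join(", ");
console.log(output); // "stripes, spots"
```

Keeping m as a list means each item and its properties can still be accessed later; joining is left to the final output step.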

The link of your generator is the URL of your page: https://perchance.org/your-url-page

I haven’t really had long conversations covering multiple topics with one bot, so I don’t have much experience in manipulating the bot’s behavior.

I would suggest adding a reminder to the character’s Reminder Message to steer the AI’s response. Another option is the /ai <reply instruction> method, in which you instruct the AI on what to reply. You could also try editing the Memories and summaries that the AI generated and uses when writing its reply. To check which memory pushes the AI to reply that way, click the brain icon that shows up at the bottom right of a message when you hover over it.

There are a lot more people knowledgeable about telling the AI what to do in the #ai-character-chat channel on the Perchance Discord.

@perchance@lemmy.world - pinging dev for other info that I’ve probably missed.

Possibly missing closing quotation marks?

Before the "negative", you didn’t close the quotation, and you used a dot instead of a comma to separate it from the negative.

...
"Style Name": {
  "prompt": "...",
  "negative": "..."
},
...
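One quick way to catch this kind of mistake is to run the text through a parser. The sketch below assumes the styles are written as strict JSON; `checkStyles` is a hypothetical helper and the prompt values are invented for illustration.

```javascript
// Hypothetical helper: reports whether a styles snippet parses as strict JSON.
function checkStyles(text) {
  try {
    return { ok: true, styles: JSON.parse(text) };
  } catch (error) {
    return { ok: false, message: error.message };
  }
}

// Broken: the prompt string is never closed and a dot replaces the comma.
const broken = '{ "Style Name": { "prompt": "soft light. "negative": "blurry" } }';

// Fixed: closing quotation mark added, comma between the two properties.
const fixed = '{ "Style Name": { "prompt": "soft light", "negative": "blurry" } }';

console.log(checkStyles(broken).ok); // false
console.log(checkStyles(fixed).ok); // true
```

The error message in the failing case varies between JavaScript engines, but it usually points near the spot where the quotation mark or comma is missing.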

If you are trying to run ‘ai-character-chat’ on Glitch, you won’t be able to, since the AI plugins don’t work on other domains, only on Perchance. I suggest looking into OpenCharacters, which the Perchance version is based on.

I tested it, and it seemed to be working properly. Possibly something in the AI’s response inside the <image> tag borked the code?

Can you send me the character link again? I would assume that you do not have [] on the prompt or [] on the negative of the pasted style, like so:

...
"Professional Photo": {
  // When adding a new style, you need to include [input.description] in the prompt so the code knows what the prefix and suffix of the prompt are. [input.negative] is not strictly required.
  prompt:
    "[input.description], {sharp|soft} focus, depth of field, 8k photo, HDR, professional lighting, taken with Canon EOS R5, 75mm lens",
  negative:
    "[input.negative], worst quality, bad lighting, cropped, blurry, low-quality, deformed, text, poorly drawn, bad art, bad angle, boring, low-resolution, worst quality, bad composition, terrible lighting, bad anatomy",
},
...
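A small check like the following could flag styles that are missing the placeholder. This is only a sketch: `findStylesMissingDescription` and `splitPrompt` are hypothetical helpers, the shortened style texts are examples, and the way the real custom code reads the prefix and suffix may differ.

```javascript
// Example styles; "Broken Style" is deliberately missing the placeholder.
const styles = {
  "Professional Photo": {
    prompt: "[input.description], sharp focus, 8k photo",
    negative: "[input.negative], worst quality, blurry",
  },
  "Broken Style": {
    prompt: "sharp focus, 8k photo", // missing [input.description]
    negative: "worst quality",
  },
};

// Hypothetical helper: names of styles whose prompt lacks [input.description].
function findStylesMissingDescription(styleMap) {
  return Object.entries(styleMap)
    .filter(([, style]) => !String(style.prompt ?? "").includes("[input.description]"))
    .map(([name]) => name);
}

// Hypothetical helper: text before and after the placeholder, or null if absent.
function splitPrompt(prompt) {
  const marker = "[input.description]";
  const index = prompt.indexOf(marker);
  if (index === -1) return null;
  return {
    prefix: prompt.slice(0, index),
    suffix: prompt.slice(index + marker.length),
  };
}

console.log(findStylesMissingDescription(styles)); // [ 'Broken Style' ]
console.log(splitPrompt(styles["Professional Photo"].prompt));
```

Without the placeholder there is no way to tell where the description should go, which is why `splitPrompt` returns null for such a style.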

I think it comes down to frequency of access, and maybe a habit of turning to the resources page to look for the plugins/templates.

I think of the resources page as an ‘all-in-one’ page for a lot of Perchance-related things that aren’t in the ‘tutorial’. I’ll ping @eatham@lemmy.world for other opinions.

While we’re at it, maybe also create a ‘notice/terms and conditions’ regarding the use of AI on the platform. A lot of people are asking for this, although I just redirect them to the AI FAQ that I compiled and to the plugin pages directly. An official one would keep it compiled in one place.

Also, this may be a very long shot, and it may be a breaking change for the user/account data (or maybe just tie it to local storage): an option to customize the navbar through account settings? For example, showing only the ‘generators’ and ‘hub’ buttons in the navigation, and hiding the ‘ai helper’ on the HTML panel permanently rather than just minimizing it.

Not sure, I don’t really dabble in NSFW content, so I wouldn’t know lmao.

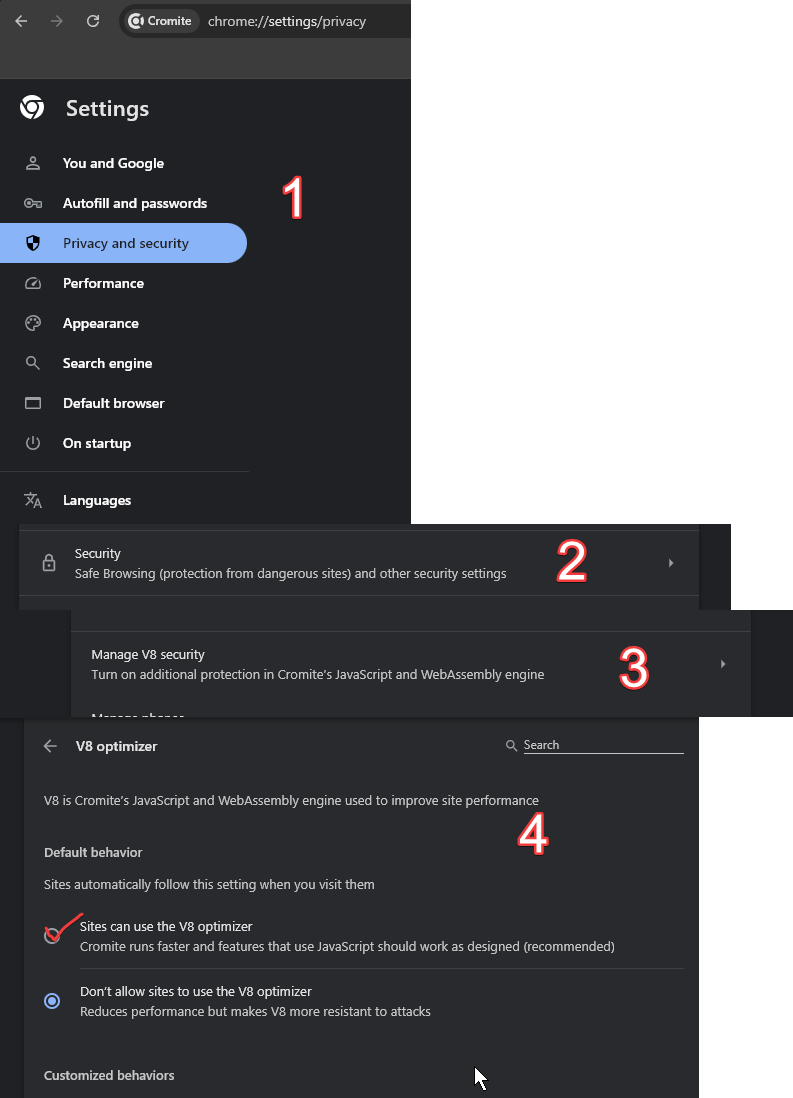

After downloading Cromite, I was able to fix the WebAssembly issue by enabling something called V8 (you could also just search “WebAssembly” in the search bar and it should highlight the setting):

Also some video proof. You could probably also just add Perchance to the list of sites that are allowed to use V8 instead of enabling it globally.

No problem! It was actually an oversight on my part lmaooo

Here is the updated code for the AI Artist.

It seems that the problem was in setting properties on the character itself. Those properties already exist on the AI Artist character.

But since you’ve transferred the code to another character, those properties are undefined there and cannot be accessed, so the code cannot change them. I’ve managed to fix it by just setting default values.
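The general idea of that fix, sketched in plain JavaScript: fill in a default for any property that is undefined before the code tries to use it. The object shape and the property names (`style`, `styles`) are hypothetical and are not taken from the actual AI Artist code.

```javascript
// Hypothetical helper: fills in defaults only where a property is undefined.
function ensureDefaults(target, defaults) {
  for (const [key, value] of Object.entries(defaults)) {
    if (target[key] === undefined) {
      target[key] = value;
    }
  }
  return target;
}

// A character that received the code but never had these properties set.
const copiedCharacter = { name: "My Character" };

ensureDefaults(copiedCharacter, { style: "Professional Photo", styles: {} });

// Existing values are kept; only the missing ones are filled in.
console.log(copiedCharacter.name); // "My Character"
console.log(copiedCharacter.style); // "Professional Photo"
```

On the original character the defaults are simply skipped because the properties already exist, so the same code can run on both.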

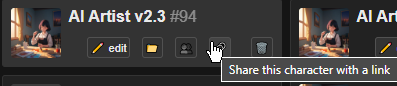

You can share the character link like this:

This won’t share any threads, just the character.

I’m not really sure. Have you added a new style to it? Maybe there are syntax errors that prevent the custom code from running. Can you send a ‘share link’ for the character?

I’ve added a fix here so that it says ‘Character Name (style)’ instead of ‘AI Artist (style)’, and the styles should now be added after clicking the button on /change-style, although you still need to edit or create a new image to apply the style.

There seems to be a problem with ‘installing’ the required packages (something like stdlib) for it on the web, even though the script itself is loaded. @perchance pinging dev so they can take a look at it.