- 3 Posts

- 43 Comments

1·1 month ago

Holy shit, that’s completely wrong.

These are AI-generated articles for sure. Time to block Softonic.

7·3 months ago

This is a weird take, given that the majority of projects relevant to this article are massive projects with hundreds or thousands of developers working on them, over time periods that can be measured in decades.

You’re pretending those don’t exist and imagining fantasy scenarios where all large projects are made up of small, modular pieces (while conveniently making no mention of all the new problems this raises in practice).

Replacing functions, replacing files, and rewriting modules is expected and healthy for any project. This article is about the tendency of programmers to believe that an entire project should be scrapped and rewritten from scratch, which seems to have nothing to do with your comment…?

41·3 months ago

This thread is a great example of why, despite sharing knowledge, we continually fail to write software effectively.

The person you’re arguing with just doesn’t get it. They have their own reality.

1·3 months ago

I have a weird knack for reverse engineering, and reverse engineering stuff I wrote 7-10 years ago is even easier!

I can usually find whatever snippet I’m looking for fast enough that I can’t be assed to do it right yet 😆

That’s one of the selling points, yep

3·3 months ago

To be fair, Microsoft has been working on Garnet for something like 4+ years and has already adopted it internally to reduce infrastructure costs.

That has been their MO for the last few years: improve .NET baseline performance, build high-performance tools on top of it, dogfood them, and then release them under open-source licenses.

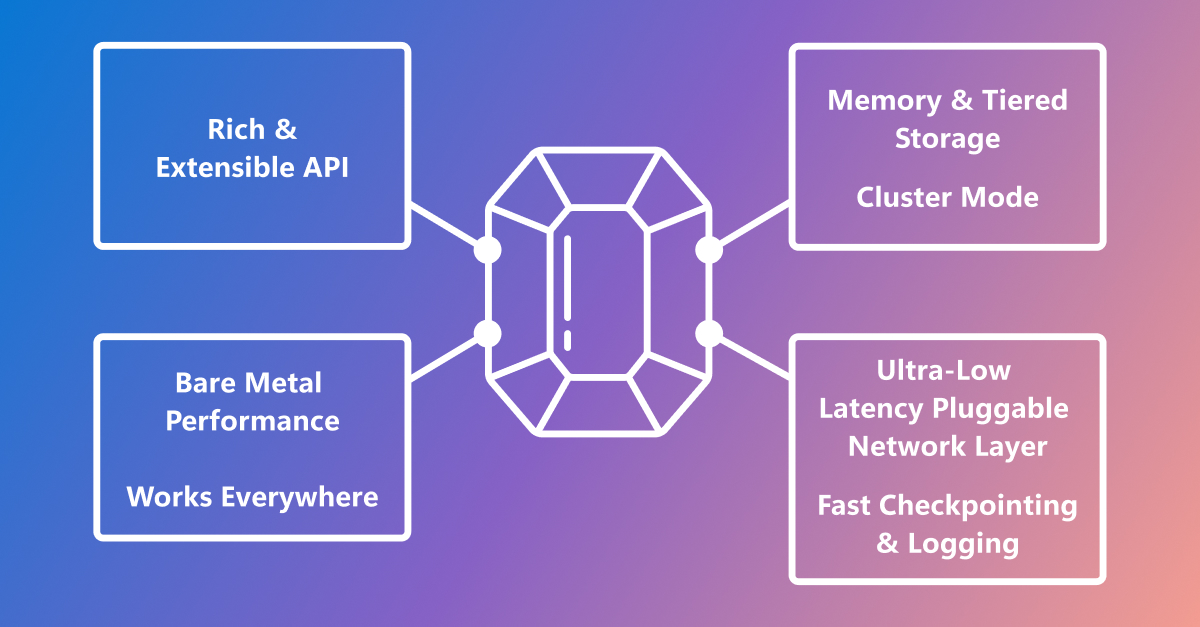

Great timing, Microsoft just released a drop-in replacement that’s an order of magnitude faster: https://github.com/microsoft/garnet

It’s written in C# too, so it’s incredibly easy to extend and to write performant functions for.

It still needs to be a bit more deployable, but they only just opened the repo, so I’ll wait.

3·3 months ago

“The designers as seen by designers” is so right.

Nothing they come up with can be wrong, it’s all innovative!!

.NET 8 will work on Linux just fine. But WinForms will not; it’s specifically a legacy Windows-only UI framework.

You’re going to have to jump through some incredible hoops to get it to work on Linux, and those hoops are definitely not part of your normal curriculum.

C# on non-Windows is not impossible, but it’s going to require effort infeasible for school projects like that one.

You mean WinForms (the Windows-specific UI) on non-Windows? Otherwise this is incredibly misleading and plain wrong.

C# on non-Windows is the norm, even the default, these days. I build, compile, and run my C# applications on Linux, and have been for the last 5+ years.

IMHO it’s unnecessary at this juncture, and it further fragments already vastly under-engaged communities (.NET & C#).

Posts about .NET & friends fit into the .NET community. It’s not so busy that a new community needs to break off to take some of the traffic & posts.

This is actually a common failure point/pain point for low-traffic or “growing phase” communities & platforms. Fragmentation reduces engagement, and below a certain threshold a community just straight up dies. Avoiding unnecessary fragmentation until it serves a purpose helps communities grow faster.

To highlight this: the number of mods you are suggesting this community should have for time zone coverage is more than the average number of comments on posts in the .NET community today…

14·7 months ago

I go full chaos and look up where I last used it when I need a snippet…

A follow-on: lots and LOTS of unrelated changes can be a symptom of an immature codebase/product, or simply of a new endeavor.

If it’s a greenfield project, you want to move fast, so you don’t want to gold-plate or over-predict the future. This often means you constantly run into miscellaneous design blockers, which necessitate refactors & improvements along the way. Depending on the team, this can be broken out into the refactor, then the feature, reviewed back-to-back. This does have its downsides, though: the overall scope of the design may become obscured, which can lead to ineffective code review.

Of course, mature codebases don’t often suffer from the same issues: most of the foundational problems are solved, and patterns are well established.

/ramble

There is no context here though?

If this is a breaking change on a major upgrade path, like a major base UI library change, then it might not be possible to break it down into pieces without tripling or quadrupling the work (which likely already took a few folks all month to achieve).

I remember migrating from Vue 1 to Vue 2 at a previous job, along with upgrading to an entirely new UI library. It required partial code freezes, and we figured it had to be done in one big push. Only 3 of us were doing it while the rest of the team kept up with maintenance & feature work.

The PR was something like 38k loc of actual UI code, excluding package/lock files. It took the team an entire dedicated week and a half to review, piece by piece, and we chewed through hundreds of comments during that time. It worked out really well: everyone was happy, and the timelines were even met early.

The same thing happened when migrating an ASP.NET codebase from .NET Framework 4.x to .NET Core 3.1. We figured that bundling in major refactors during the process, to get the biggest bang for our buck, was the best move. It was something like 18k loc, and it also worked out similarly well in the end.

Things like this happen not that infrequently, depending on the org, and they work out just fine as long as you have a competent, well-organized team that can hold a course for more than a few weeks.

Just a few hundred?

That seems awfully short, no? We’re talking a couple of hours of good flow state; that may not even be a full feature at that point 🤔

We have folks who can push out 600-1k loc covering multiple features/PRs in a day if they’re having a great day and working in an area where they’re proficient.

Never mind important refactors that touch a thousand or a few thousand lines, which might be pushed out on a daily basis and need relatively fast turnarounds.

Essentially, half of the job of writing code is reviewing code, and it really should be thought of that way.

(No, loc is not a unit of performance measurement, but it can correlate)

1·8 months ago

Someone who shares the experience they’ve gained from writing real-world software, with introspection into the dynamics & struggles involved?

Your age (or rather your career progression, which correlates with it) may actually be the reason you have no interest in this.

4·8 months ago

Like most large conceptual practices, the pain comes when it’s misused, mismanaged, and misunderstood.

DDD, like Agile, adds more to success than it detracts when applied as intended. That success means others take it and try to use it as a panacea, inappropriately applying their limited, misunderstood bastardization of it and getting the opposite effect.

That leads devs to incorrectly associate these concepts & processes with the pain they have, instead of recognizing a bad implementation as a bad implementation.

Personally, I’ve found great success by applying DDD where necessary and as needed, modifying it to best fit my needs (emphasis mine). I write code faster than I ever have before, with fewer bugs, that is more easily understood and that enforces patterns & separations that improve productivity. This is not because I “went DDD”; it’s because I bought the blue book, read it, and then cherry-picked the parts that work well for my use cases.

And that’s the crux of it. Every team, every application, every job is different, and that difference requires a modified approach that takes DevX & ergonomics into consideration. There is no one-size-fits-all solution; it ALWAYS needs to be picked at and adjusted.

To answer your question:

Yes, I have had lots of pain from DDD. However, following the principles of pain-driven development, when that pain arises we reflect, and then change our approach to reduce or eliminate it.

Pain is unavoidable; it’s how you deal with it that matters. Do you double down and make it worse, or do you stop, reflect, fix the pain, refactor, and move on with an improved and more enlightened process?

It’s literally just “agile”, but for developer experience.


1·8 months ago

System.Text.Json routinely fails to be ergonomic; it’s actually quite inconvenient overall.

JSON is greedy, but System.Text.Json isn’t, and it falls over constantly on common use cases. I’ve been trying it out on new projects with every new release since it shipped with .NET Core 3.0, and every time it burns me.

GitHub threads requesting sane defaults, greedier behavior, and better DevX/ergonomics are largely met with disdain by maintainers, which indicates that the state of System.Text.Json is unlikely to change…

I really REALLY want to use the native tooling; that’s what makes .NET so productive to work in. But JSON handling & manipulation is still an absolute nightmare.

Would not recommend.

The ecosystem is really it. C# as a language isn’t the best; objectively, TypeScript is a much more developer-friendly and globally type-safe (at design time) language. It’s far more versatile than C# in that regard, to the point where there is almost no comparison.

But holy hell, the .NET ecosystem is light-years ahead: it’s incredibly consistent across major versions, extremely high quality, has consistent and well-considered design advancements, and is absolutely bloody fast. Tie that in with first-party frameworks that cover almost all major needs, and it all works together so smoothly, at least for web dev.
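To make the “type safe (at design time)” point about TypeScript a bit more concrete, here is a small sketch. The `Shape` type, `area` function, and `assertNever` helper are made up purely for illustration. The compiler narrows a discriminated union inside each `switch` branch, and the `never` check turns any unhandled variant into a compile-time error. The last line shows the flip side of “design time”: types are erased in the emitted JavaScript, so a `JSON.parse(...) as Shape` cast compiles but is never verified at runtime.

```typescript
// Hypothetical domain type, invented for this illustration.
type Shape =
  | { kind: "circle"; radius: number }
  | { kind: "rect"; width: number; height: number };

// Exhaustiveness helper: if a new Shape variant is added but not handled
// in the switch below, `shape` is no longer `never` there and tsc errors.
function assertNever(x: never): never {
  throw new Error(`Unhandled variant: ${JSON.stringify(x)}`);
}

function area(shape: Shape): number {
  switch (shape.kind) {
    case "circle":
      // Narrowed: the compiler knows `radius` exists in this branch.
      return Math.PI * shape.radius ** 2;
    case "rect":
      // Narrowed: `width` and `height` exist here, `radius` does not.
      return shape.width * shape.height;
    default:
      return assertNever(shape);
  }
}

// The "design time" caveat: this compiles, but the parsed value has no
// `radius`, so nothing stops it from reaching area() and producing NaN.
const unchecked = JSON.parse('{"kind":"circle"}') as Shape;
```

For example, `area({ kind: "rect", width: 2, height: 3 })` returns `6`, while `area(unchecked)` returns `NaN`, because the cast only satisfied the compiler, not the data.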