Currently trying to refund the new Indiana Jones game because it’s unplayable without raytracing. My card isn’t even old, it’s just that 8GB of VRAM is apparently the absolute minimum, so my mobile 3060 is now useless. I miss when I used to be able to play new games in 2014 on my shitty AMD card at 20fps. Yeah, it didn’t look great, but developers still included a very low graphics option for people like me. Now you need to be upgrading every 2 years to keep up.

I’m having the opposite experience with Indiana Jones. I expected it to be unplayable on my mobile 3060 but in fact it runs great. I had to set a weird launch option that I found on ProtonDB, but once I did that it went from unplayable under 10fps to a smooth 60fps in 1080p.

Try enabling this setting

__GL_13ebad=0x1

The price of storage dropping quickly ruined optimization, so things turned from “how do I get the most out of a tiny bit of space” to “Fuck you. Upgrade your gear!”

that one is atrocious but another thing i also find nasty is the amount of disk space new games need. sure, buying more disks is way cheaper than getting more graphical power but downloading 100+ GB for a game I might just play once feels like an incredible waste

games should have a lo-fi version where they use lower textures and less graphical features for the people that cannot actually see the difference in graphics after the ps2 era

it’s not just texture quality, game devs also throw in TONS of unused assets that just live on the disk and don’t do anything. i think GTA V is about half just unused assets.

Rainbow 6 Siege had to downgrade map assets because the skins take up too much space lol

Cosmetics is a wholly separate clown-show. Dota 2 used to be a few gigabytes in space. Now because of all the hats it’s like 30gb compressed.

War Thunder (garbage game, don’t play) does this. You can choose to download higher quality textures. I don’t care, I haven’t noticed the difference.

The snail yearns for your money

Never. Always ftp

Same, I managed to get to BR 8.7 in the Soviet ground tree as a free-to-play, but I stopped making progress because the higher BR matches just aren’t that fun so I stick around in 3.7-4.0 and gain like no research points, lol

Real. I have my first 8.0 in Soviet, but it’s just a grind, no matter what BR I play. 2.7 or so is my go-to

war thunder bad?

Garbage game. Yet I continue to play

War thunder bad.

damn, I like my little russian planes

There’s been a couple games I’ve decided to just not buy because the disk space requirement was too high. I don’t think they care much about a single lost sale, unfortunately.

There are some good videos out there that also explain how UE5 is an unoptimised mess. Not every game runs on UE5 but it’s the acceptable standard for game engines these days

That and DX12 in general, in my experience. Almost every game where I’ve had the option to use DX11 instead of DX12, the difference has been night and day. Helldivers 2 especially had an absurd improvement for me.

Can you link some, that sounds very interesting.

I also would like to see them

This is the main one I saw. It’s kind of an ad for this guy’s game company, but clouds in a skybox shouldn’t cause performance issues https://youtu.be/6Ov9GhEV3eE

It’s optimized around dev costs and not performance, sadly.

I’m finding the latest in visual advancements feels like a downgrade because of image quality. Yeah, all these fancy technologies are being used, but it’s no good when my screen is a mess of blur, TAA, and artifacting from upscaling or framegen. My PC can actually play Cyberpunk with path tracing but I can’t even begin to appreciate the traced paths WHEN I CAN’T SEE SHIT ANYWAY.

Currently binging Forza Horizon 4, which runs at 60fps on high on my Steam Deck and runs 165fps maxed on my PC with 8x MSAA, and it looks beautiful. And why is it beautiful? It’s because the image is sharp, so I can actually see the details the devs put into the game. Half-Life: Alyx is another game that is on another level with crisp and clear visuals, but it also ran on a 1070 Ti with no issues. Today’s UE5 screen vomit can’t even compare

All games these days know is stutter, smeary image, dx12 problems and stutter

TAA, DoF, chromatic aberration, motion blur, vignetting, film grain, and lens flare. Every modern dev just dumps that shit on your screen and calls it cinematic. It’s awful and everything is blurry. And sometimes you have to go into an ini file because it’s not in the settings.

Chromatic aberration! When I played R&C: Rift Apart on PS5 I was taking screenshots and genuinely thought there was some kind of foveated rendering in play because of how blurry the corners of the screen look. Turns out it was just chromatic aberration, my behated.

Hate film grain too because I have visual snow and I don’t need to stack more of that shit in my games.

Dev: should we make our game with a distinctive style that is aesthetically appealing? Nah slap some noise on the screen and make it look like your character is wearing dirty oakleys and has severe astigmatism and myopia that’ll do it.

I despise TAA. I remember back when I played on PS4, I could immediately spot a UE4 game because they almost always had awful TAA ghosting.

I want my games to be able to be rendered in software, I want them to be able to run on a potato from the early 2000s and late 90s, is this too much for a girl to ask for

Todd Howard made Morrowind run on 64MB of RAM in a cave. With a box of scraps.

All of the boomer game devs that had to code games for like a 486 have now retired, replaced with people who nVidia or AMD can jangle shiny keys in front of to make their whole games around graphics tech like cloth physics and now ray tracing.

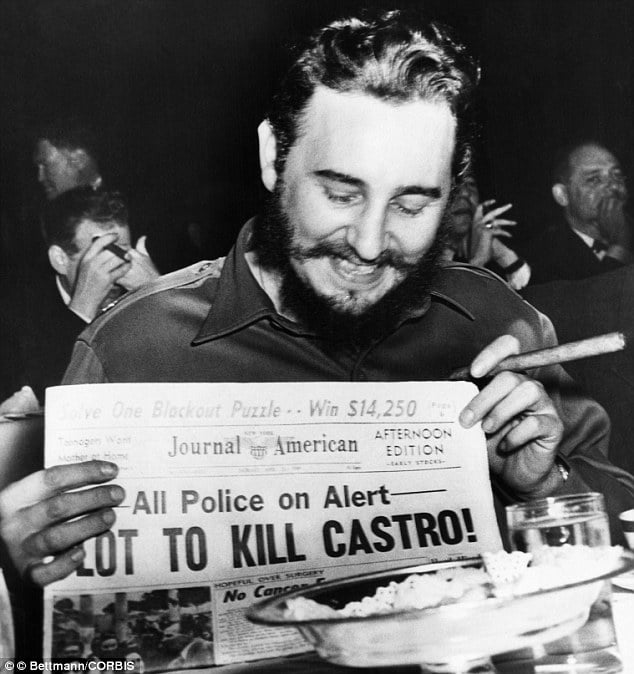

I just want to punch nazis why does it have to matter if the reflection of a pigeon off screen appears in Indiana Jones’ eyes??

Because it sells and they like monies

This is why solo or small team indie devs are the only devs I give a shit about. Good games that run well, are generally cheap, and aren’t bloated messes.

I just want ps2-level graphics with good art direction (and better hair, we can keep the nice hair) and interesting gameplay and stories. Art direction counts for so much more than graphics when it comes to visuals anyway. There are Playstation 1 games with good art direction that imo are nicer to look at than some “graphically superior” games.

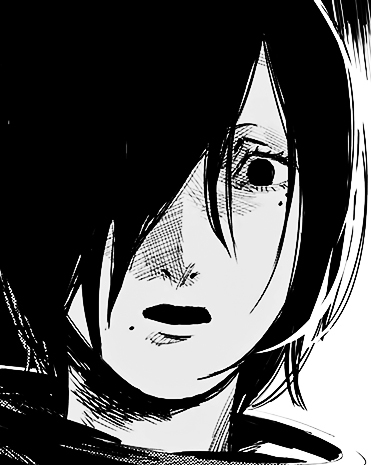

What hair in modern games looks like

when u see a Homo Sapiens for the first time

Yeah i would much rather a hairstyle be a single solid texture than whatever the fuck this “HAIRFX individual hair rendering 9000” bullshit is, that always ends up looking like trash

This is part of why I’ve pretty much stopped following mainstream releases. Had to return Space Marine 2 because it would not stop crashing and the low settings looked like absolute dogshit

I got a special fucking bone to pick with Cities Skylines 2. I’ve never had a game look like such vaseline-smeared ass while making my computer sound like it’s about to take off. It’s a shame because it’s definitely come a long way as a game and has some really nice buildings now, but to play it I start to get nervous after like half an hour and have to let my computer cool down, fuck that shit.

There’s a number of YouTube videos examining how CS2 was designed in such a shockingly bad way to murder your GPU

Yeah, I’m getting real fucking tired of struggling to get 60fps in new games even with DLSS cranked to max. They don’t even look much better. There’s plenty of older games that look better and run better that you don’t need to subject yourself to DLSS ghosting and frame gen latency to play. I’ve been telling my main co-op buddy that I might just stop playing new games (at least larger releases) because this shit is so frustrating.

DLSS created an excuse for developers to throw optimization to the side and just do whatever they please. I figured this is what would happen when it was created and it’s definitely happening now. I’m glad that I don’t play AAA games for the most part, cause this shit sounds annoying.

Unlimited 720p renderer on the dlss world

I decided I’m not gunna’ play any game that isn’t released on PS4 or Switch. My PC isn’t really powerful enough to play PS5-exclusive games anyway, so that sets a similar hard-limit. I was gunna’ decide no game post-2020 but this choice made more sense.

I remember reading an article by Cosmo about music/metal on his ex-blog Invisible Oranges about how since we have a limited amount of time on Earth we’re all making limits or acting within limits like these anyway. Some are just more conscious of it than others, so it shouldn’t be treated as weird for someone to consciously decide they want to engage with a hobby in a specific manner like this.

I’m in a similar boat but I’m letting my budget dictate what I play instead. Tekken 8 looks cool but why pay $70+DLC when I have Tekken 7, also very cool, for $6? I’m always a version behind but my wallet thanks me.

Also Peggle is extremely cheap and I’ve gotten an RPG number of hours out of that series.

if a game can’t run on everything people have run Doom on, i don’t want to play it

yes this includes the digital pregnancy test and the parking ticket validator

duke nukem: that’s a lot of ~~words~~ VRAM. too bad i’m not buyin it.

seriously, i hate this shit. i have a 1080 ti that i got used several years ago when the market had hit a bit of a lull and it’s got some firmware bug that stops it from running most modern games, even ones it has enough vram to run. this is why indie games and old games that people are still making mods or private server sets for like cod4 are so great.

My 1080 kept having performance issues with each update. I had to revert to an older driver in order to get anything to work. Nvidia and whatever game devs blamed each other for the issue, so I haven’t figured out why it was happening.

My CPU is 12 years old and my GPU 7. So yeah… I’m gonna stick with indie and older games.

People were saying this about Morrowind

Yeah but they were right, Morrowind looks too good, every game should look like Cruelty Squad

They were kind of correct back then too, with the amount of upgrading the industry would expect you to do. That just petered off there for a while, luckily. Seems to be back in full force now though

That said, at least back then all the shit gave you actual functionalities as per graphics instead of like raytracing on retinas or some bullshit you’d never notice

I think that has to do with consoles: when a console generation is outdated mid or low range hardware that forces more general optimization and less added bullshit, especially when that generation drags on way too long and means devs are targeting what is basically a decade old gaming computer towards the end. When they’re loss leaders and there’s a shorter window between generations or upgraded same-generation versions, it means devs are only optimizing enough to run on a modern mid range gaming rig and specifically the console configuration of that.

Although there’s some extra stuff to it too, like the NVidia 10 series was an amazing generation of GPUs that remained relevant for like a decade, and the upper end of it is still sort of relevant now. NVidia rested on their laurels after that and has been extremely stingy with VRAM because their cash cow is now high end server cards for AI bullshit and they want businesses to buy $5000+ cards instead of <$1000 ones that would work good enough if they just had a bit more VRAM. GPUs have also gotten more and more expensive because of crypto and AI grifters letting NVidia know they can just keep raising prices and delivering less and people will still buy their shit, and AMD just grinning and following after them, delivering better cards at lower prices but not that much lower since they can get away with it too.

Can confirm